
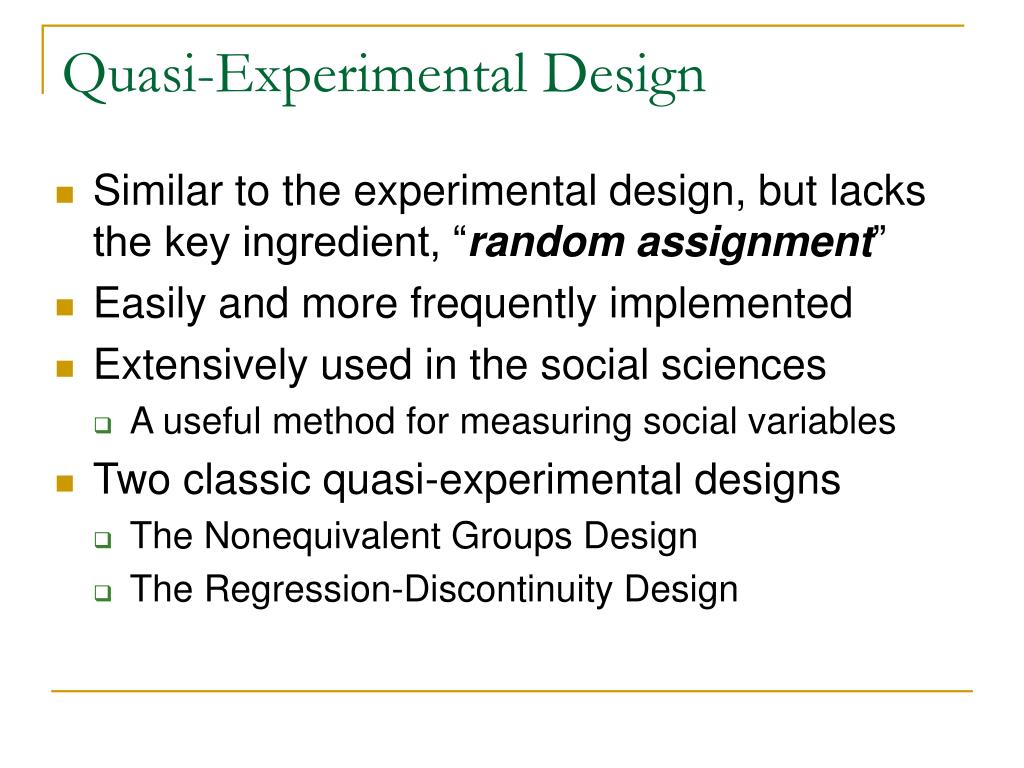

It has been observed that it is more difficult to conduct a good quasi-experiment than to conduct a good randomized trial (43). Although quasi-experimental designs (QEDs) are increasingly used, randomized designs are still preferred except where randomization is not possible. In this paper we present three important QEDs and variants nested within them that can increase internal validity while also improving external validity, and present case studies employing these techniques. One of the strengths of QEDs is that they are often employed to examine intervention effects in real-world settings, often across more diverse populations and settings. Consequently, if the characteristics of participants and setting-related factors are adequately examined, it can be possible to interpret findings for critical groups for which there may be no existing evidence of an intervention effect. For example, in the Campus Watch intervention (16), the investigator over-sampled the Maori indigenous population in order to stratify the results and investigate whether the program was effective for this under-studied group.

Quasi-experimental Designs without Control Groups

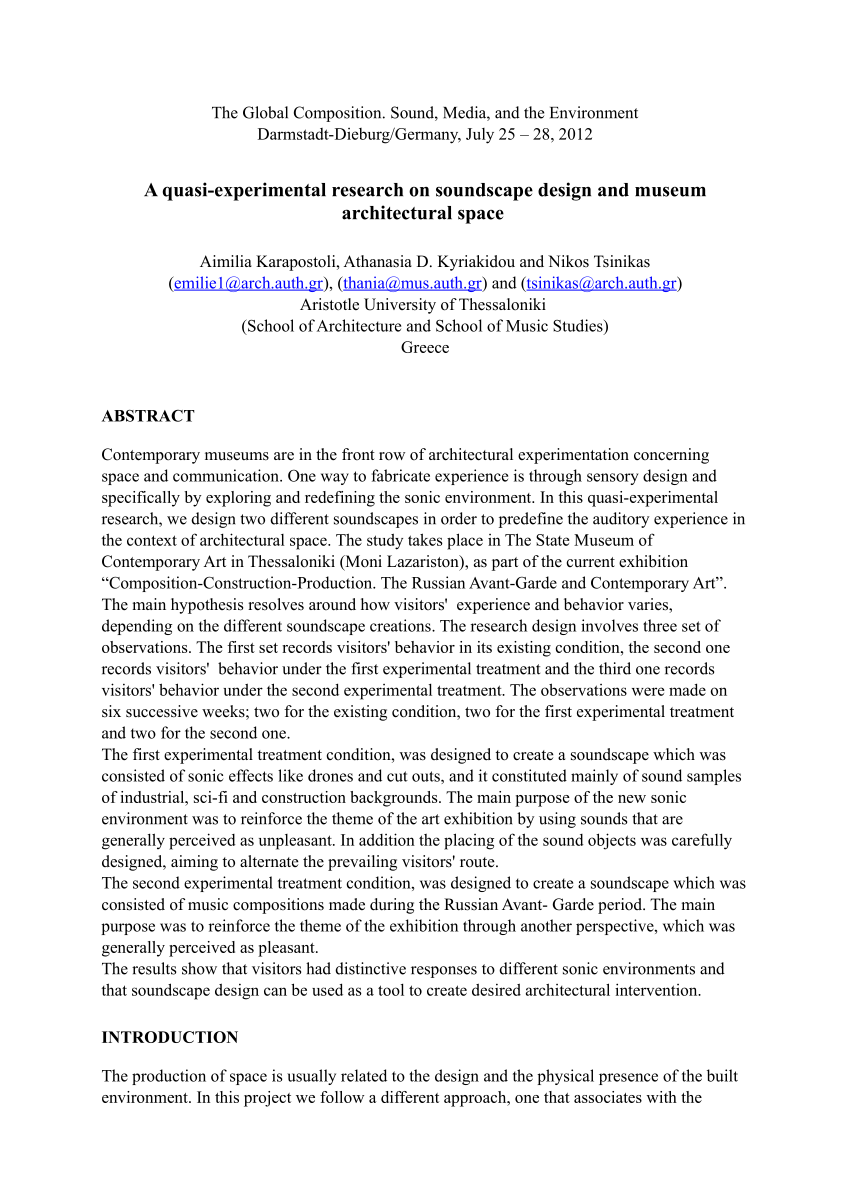

The prefix quasi means “resembling.” Thus quasi-experimental research is research that resembles experimental research but is not true experimental research. Although the independent variable is manipulated, participants are not randomly assigned to conditions or orders of conditions (Cook & Campbell, 1979). Another alternative explanation for a change in the dependent variable in a pretest-posttest design is regression to the mean. This refers to the statistical fact that an individual who scores extremely on a variable on one occasion will tend to score less extremely on the next occasion.

Natural Experiments:


Of these 25, 15 studies were of category A, five studies were of category B, two studies were of category C, and no studies were of category D. Although there were no studies of category D (interrupted time-series analyses), three of the studies classified as category A had data collected that could have been analyzed as an interrupted time-series analysis. Nine of the 25 studies (36%) mentioned at least one of the potential limitations of the quasi-experimental study design. In the four-year period of IJMI publications reviewed by the authors, nine quasi-experimental studies among eight manuscripts were published. Of these nine, five studies were of category A, one of category B, one of category C, and two of category D. Two of the nine studies (22%) mentioned at least one of the potential limitations of the quasi-experimental study design.

Ethical reasons

In the most controlled situations within this design, the investigators might include elements of randomization or matching for individuals in the intervention or comparison site, to attempt to balance the covariate distribution. Implicit in this approach is the assumption that the greater the similarity between groups, the smaller the likelihood that confounding will threaten inferences of causality of effect for the intervention (33, 47). Thus, it is important to select this group or multiple groups with as much specificity as possible. This design involves studying the effects of an intervention or event that occurs naturally, without the researcher’s intervention. For example, a researcher might study the effects of a new law or policy that affects certain groups of people.

Explanatory Research – Types, Methods, Guide

Researchers left out certain variables that would play a crucial role in determining the growth of each city. They used pre-existing groups of people based on research conducted in each city, rather than random groups. A quasi-experimental design allows researchers to take advantage of previously collected data and use it in their study. One such method, commonly called a regression discontinuity design, compares the outcomes of participants who fall on either side of a predetermined cutoff point. It can help researchers determine whether an intervention had a significant impact on the target population. Another method examines the impact of an intervention or treatment over time by comparing data collected before and after the intervention or treatment.

Types of Quasi-Experimental Designs

For example, O1 could be pharmacy costs prior to the intervention, X could be the introduction of a pharmacy order-entry system, and O2 could be the pharmacy costs following the intervention. Including a pretest provides some information about what the pharmacy costs would have been had the intervention not occurred. In medical informatics, what often triggers the development and implementation of an intervention is a rise in the rate above the mean or norm. For example, increasing pharmacy costs and adverse events may prompt hospital informatics personnel to design and implement pharmacy order-entry systems. Because of regression to the mean, a rate that has risen well above its norm will tend to decline toward it even without any intervention. However, informatics personnel and hospital administrators often cannot wait passively for this decline to occur. Therefore, hospital personnel often implement one or more interventions, and if a decline in the rate occurs, they may mistakenly conclude that the decline is causally related to the intervention.

In a study to determine the economic impact of government reforms in an economically developing country, the government decided to test whether creating reforms directed at small businesses or luring foreign investments would spur the most economic development. After a year, the researchers assessed the performance of each start-up company to determine growth. The results indicated that the tech start-ups were further along in their growth than the textile companies. The choice between these items (again, only one can be answered “yes”) is key to understanding the basis for inferring causality.

Qualitative Research Methods

“Quasi” means “resembling.” In quasi-experimental research, individuals are not randomly allocated to conditions or orders of conditions, even though the independent variable is manipulated. As a result, quasi-experimental research is research that appears to be experimental but is not. This is a hybrid of experimental and quasi-experimental methods and is used to leverage the best qualities of the two. Unlike a true experimental design, the nonequivalent groups design uses pre-existing groups believed to be comparable rather than randomly assigned ones.

The Use and Interpretation of Quasi-Experimental Studies in Medical Informatics

This type of design does not completely eliminate the possibility of confounding variables, however. Something could occur at one of the schools but not the other (e.g., a student drug overdose), so students at the first school would be affected by it while students at the other school would not. Ethical considerations typically will not allow random withholding of an intervention with known efficacy.

Ethical concerns often arise in research when randomizing participants to different groups could potentially deny individuals access to beneficial treatments or interventions. In such cases, quasi-experimental designs provide an ethical alternative, allowing researchers to study the impact of interventions without depriving anyone of potential benefits. One potential threat to internal validity in experiments occurs when participants either drop out of the study or refuse to participate in the study. If particular types of individuals drop out or refuse to participate more often than individuals with other characteristics, this is called differential attrition. For example, suppose an experiment was conducted to assess the effects of a new reading curriculum.

Another important threat to establishing causality is regression to the mean (12, 13, 14). This widespread statistical phenomenon can result in wrongly concluding that an effect is due to the intervention when in reality it is due to chance. The phenomenon was first described in 1886 by Francis Galton who measured the adult height of children and their parents. He noted that when the average height of the parents was greater than the mean of the population, the children tended to be shorter than their parents, and conversely, when the average height of the parents was shorter than the population mean, the children tended to be taller than their parents.
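A small simulation can make regression to the mean concrete. The sketch below assumes each unit has a stable true level and that each measurement adds independent random noise; the function name, the distribution parameters, and the 10% selection rule are illustrative choices, not taken from any study. No intervention is applied at any point.

```python
import random
import statistics

def simulate_regression_to_mean(n=10_000, top_fraction=0.1, seed=0):
    """Measure a stable trait twice with noise and follow the most extreme units."""
    rng = random.Random(seed)
    # Each unit has a stable true level; each measurement adds independent noise.
    true_levels = [rng.gauss(100, 10) for _ in range(n)]
    first = [t + rng.gauss(0, 10) for t in true_levels]
    second = [t + rng.gauss(0, 10) for t in true_levels]

    # Select the units with the highest first measurement, mimicking an
    # intervention that is triggered by an unusually high rate.
    cutoff = sorted(first)[int(n * (1 - top_fraction))]
    selected = [i for i in range(n) if first[i] >= cutoff]

    mean_first = statistics.mean(first[i] for i in selected)
    mean_second = statistics.mean(second[i] for i in selected)
    return mean_first, mean_second

mean_first, mean_second = simulate_regression_to_mean()
print(f"selected group, first measurement:  {mean_first:.1f}")
print(f"selected group, second measurement: {mean_second:.1f}")
```

The selected group scores lower on the second measurement even though nothing was done to it, which is the pattern that can be mistaken for an intervention effect in a pretest-posttest design.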

Instead, researchers can select two comparable classes, one receiving the math app intervention and the other continuing with traditional teaching methods. By comparing the performance of the two groups, researchers can draw conclusions about the app’s effectiveness. This design involves measuring the dependent variable multiple times before and after the introduction of an intervention or treatment. By comparing the trends in the dependent variable, researchers can infer the impact of the intervention. This design involves selecting pre-existing groups that differ in some key characteristics and comparing their responses to the independent variable. Although the researcher does not randomly assign the groups, they can still examine the effects of the independent variable.

Obtaining pretest measurements on both the intervention and control groups allows one to assess the initial comparability of the groups. The assumption is that the more similar the intervention and control groups are at the pretest, the smaller the likelihood that important confounding variables differ between the two groups. This design involves the inclusion of a nonequivalent dependent variable (b) in addition to the primary dependent variable (a). Variables a and b should assess similar constructs; that is, the two measures should be affected by similar factors and confounding variables except for the effect of the intervention. Taking our example, variable a could be pharmacy costs and variable b could be the length of stay of patients. If our informatics intervention is aimed at decreasing pharmacy costs, we would expect to observe a decrease in pharmacy costs but not in the average length of stay of patients.
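The logic of the nonequivalent dependent variable can be shown with a short calculation. The sketch below compares the percent change in variable a (pharmacy costs) with the percent change in variable b (length of stay); the function names and all figures are hypothetical and serve only to illustrate the comparison.

```python
def percent_change(pre, post):
    """Percent change from the pretest value to the posttest value."""
    return 100 * (post - pre) / pre

def compare_with_nonequivalent_dv(a_pre, a_post, b_pre, b_post):
    """Contrast the change in the targeted variable (a) with the untargeted one (b)."""
    change_a = percent_change(a_pre, a_post)
    change_b = percent_change(b_pre, b_post)
    # If shared confounders drove the change, a and b should move together,
    # so the difference between them should be close to zero.
    return {"change_a": change_a, "change_b": change_b, "difference": change_a - change_b}

# Hypothetical figures: pharmacy costs per patient (a) and mean length of stay in days (b).
result = compare_with_nonequivalent_dv(a_pre=200.0, a_post=170.0, b_pre=5.0, b_post=5.0)
print(result)
```

A drop in a alongside a stable b is the pattern the design looks for; if both had fallen by a similar amount, a shared external factor would be a more plausible explanation than the intervention.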

The answers to this question will be less important if the researchers of the original study used a method to control for any confounding, that is, used a credible quasi-experimental design. Clusters exist when observations are nested within higher-level organizational units or structures through which an intervention is implemented or data are collected; typically, observations within clusters will be more similar with respect to outcomes of interest than observations between clusters. Clustering is a natural consequence of many methods of nonrandomized assignment/designation because of the way in which many interventions are implemented. Analyses of clustered data that do not take clustering into account will tend to overestimate the precision of effect estimates. Some of the study designs described in parts 1 and 2 may seem similar, for example, DID and CBA, although they are labeled differently.
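One common way to see how much precision is overstated when clustering is ignored is the design effect, 1 + (m - 1) × ICC, where m is the cluster size and ICC is the intraclass correlation. The sketch below assumes equal cluster sizes; the function names and the example values (20 clusters of 50, ICC of 0.05, naive standard error of 1.0) are hypothetical.

```python
import math

def design_effect(cluster_size, icc):
    """Variance inflation from clustering, assuming equal cluster sizes."""
    return 1 + (cluster_size - 1) * icc

def effective_sample_size(n_total, cluster_size, icc):
    """Number of independent observations the clustered sample is worth."""
    return n_total / design_effect(cluster_size, icc)

def adjusted_standard_error(naive_se, cluster_size, icc):
    """Inflate a standard error that was computed as if observations were independent."""
    return naive_se * math.sqrt(design_effect(cluster_size, icc))

# Hypothetical study: 20 clusters of 50 participants, intraclass correlation 0.05.
deff = design_effect(cluster_size=50, icc=0.05)
ess = effective_sample_size(n_total=1000, cluster_size=50, icc=0.05)
se = adjusted_standard_error(naive_se=1.0, cluster_size=50, icc=0.05)
print(f"design effect: {deff:.2f}")
print(f"effective sample size: {ess:.0f}")
print(f"adjusted standard error: {se:.2f}")
```

Even a small intraclass correlation shrinks the effective sample size substantially when clusters are large, which is why an analysis that ignores clustering reports confidence intervals that are too narrow.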

The leaders attended a six-week workshop on leadership style, team management, and employee motivation. Since every grade had two math teachers, each teacher used one of the two apps for three months. They then gave the students the same math exams and compared the results to determine which app was most effective.

Unfortunately, one often cannot conclude this with a high degree of certainty because there may be other explanations for why the posttest scores are better. Perhaps an antidrug program aired on television and many of the students watched it, or perhaps a celebrity died of a drug overdose and many of the students heard about it. Participants might have changed between the pretest and the posttest in ways that they were going to anyway because they are growing and learning. If it were a yearlong program, participants might become less impulsive or better reasoners and this might be responsible for the change. Using the pharmacy order-entry system example, it may be difficult to randomize use of the system to only certain locations in a hospital or portions of certain locations.
